Eyes, JAPAN

Use of datasets from Kaggle API in Google Colab notebook

Cherubin Mugisha

1. Introduction

Kaggle and Google Colab are one of the best combinations for aspiring data scientists.

The Kaggle API gives access to thousands of publicly available datasets, while a Colab notebook offers a way to code interactively in Python using free cloud resources. The free resources are time-limited, but they are usually enough to work with a reasonable amount of data.

Kaggle hosts thousands of datasets covering different data types, such as structured tabular data, audio, images, and video. It also offers limited access to its own computational resources through Jupyter notebooks, and you can draw inspiration from more than 400,000 public notebooks for your data analysis and AI projects in Python and R.

However, some users may prefer to use a Google Colab notebook instead, for various reasons. In this post, I will walk you through connecting the two services so that you can use Kaggle data in a Colab notebook.

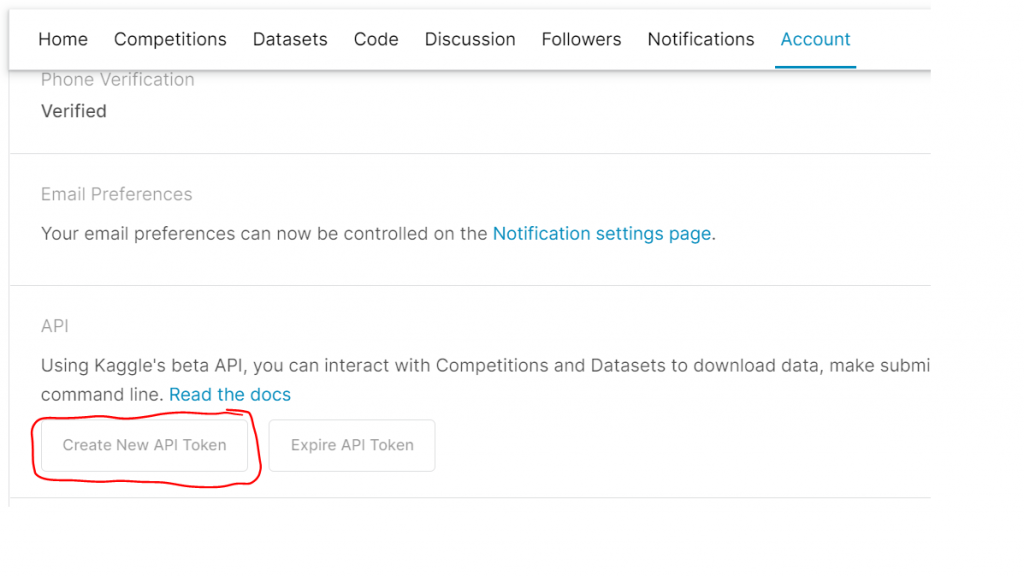

2. Get the Kaggle API credentials

First, sign in to your Kaggle account. From your account settings, download your API token, kaggle.json, to a local folder.
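Before uploading the token, it can be worth checking that the downloaded file looks as expected. The sketch below assumes the token is a small JSON object with "username" and "key" fields, which is the layout of the classic kaggle.json file; the function name check_kaggle_token is made up for this illustration and is not part of the Kaggle client.

```python
import json
from pathlib import Path


def check_kaggle_token(path):
    """Return the username stored in a kaggle.json-style file.

    Assumes the file is a JSON object with "username" and "key" fields.
    Raises ValueError if the layout is different or a field is empty.
    """
    data = json.loads(Path(path).read_text(encoding="utf-8"))
    if not isinstance(data, dict):
        raise ValueError("token file should contain a JSON object")
    missing = [field for field in ("username", "key") if not data.get(field)]
    if missing:
        raise ValueError("token file is missing: " + ", ".join(missing))
    # Only the username is returned, so the secret key is never displayed.
    return data["username"]
```

Calling check_kaggle_token("kaggle.json") in the folder where the token was saved should return your Kaggle username if the file is intact.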

Once this step is done, move to your Colab notebook.

3. Colab notebook

Since the notebook runs on a Linux-based machine, you can run shell commands directly from a code cell by prefixing each command with a “!”.

Before you import your credentials into the notebook, install the kaggle library by running the following command in a new code cell:

! pip install kaggle

Then, you can load your credentials file into the notebook's runtime with:

from google.colab import files

uploaded = files.upload()

for fn in uploaded.keys():
    print('User uploaded file "{name}" with length {length} bytes'.format(
        name=fn, length=len(uploaded[fn])))

!mkdir -p ~/.kaggle/ && mv kaggle.json ~/.kaggle/ && chmod 600 ~/.kaggle/kaggle.json

An upload widget will appear, allowing you to choose a file (kaggle.json) from your local folder and copy it to the notebook's cloud storage. The last line of code moves kaggle.json to ~/.kaggle/, where the API expects to find it, and uses chmod 600 to restrict the access rights so that only your user can read and write the file.
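The same three shell steps (create the folder, move the file, restrict the permissions) can also be written in plain Python, which may help if you want to reuse them outside a notebook. This is a minimal sketch using only the standard library; the function name install_kaggle_token and its home parameter are hypothetical and exist only to make the example easy to try in a scratch folder.

```python
import shutil
from pathlib import Path


def install_kaggle_token(token_path, home=None):
    """Move a kaggle.json file into <home>/.kaggle/ and restrict its permissions."""
    # `home` is an illustrative parameter; by default the real home folder is used.
    home_dir = Path(home) if home is not None else Path.home()
    target_dir = home_dir / ".kaggle"
    target_dir.mkdir(parents=True, exist_ok=True)   # like: mkdir -p ~/.kaggle/
    target = target_dir / "kaggle.json"
    shutil.move(str(token_path), str(target))       # like: mv kaggle.json ~/.kaggle/
    target.chmod(0o600)                             # like: chmod 600 (owner read/write only)
    return target
```

After files.upload() has saved the token in the working folder, install_kaggle_token("kaggle.json") would place it where the shell command above does.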

Once this is done, you can start dealing with the data.

4. Import a dataset from Kaggle

To use datasets from Kaggle directly in your Colab notebook, you need to understand that there are two types of datasets available on Kaggle.

- User datasets: These are datasets that Kaggle users have uploaded and made available to the public. The FIFA 22 - Ultimate Team Dataset by Baran Baltaci is one example.

- Competition datasets: These datasets are made public for competitions and are maintained by Kaggle and the competition organizers. The NFL Health & Safety – Helmet Assignment competition, hosted by the National Football League, is an example; it was ongoing at the time of writing.

The download procedure is the same for both types, with minor differences in the command.

For user datasets, it will be:

! kaggle datasets download <user_name/name-of-dataset>

Using the previous example of the FIFA 22 - Ultimate Team Dataset, the command will be:

! kaggle datasets download baranb/fut-22-ultimate-team-dataset
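The user-dataset command above and the competition command described next differ only in the subcommand and in how the name is passed, so a small helper can build either one. The sketch below uses only the command forms shown in this post; the function names kaggle_download_command and run_download are invented for illustration, and actually running the command assumes the kaggle package is installed and the credentials are already in place.

```python
import subprocess


def kaggle_download_command(name, competition=False):
    """Build the argument list for a Kaggle download command."""
    if competition:
        # Competition form: kaggle competitions download -c <competition-name>
        return ["kaggle", "competitions", "download", "-c", name]
    # User dataset form: kaggle datasets download <user_name>/<name-of-dataset>
    if name.count("/") != 1 or not all(name.split("/")):
        raise ValueError("expected '<user_name>/<name-of-dataset>'")
    return ["kaggle", "datasets", "download", name]


def run_download(name, competition=False):
    """Run the download; needs the kaggle package and credentials to be set up."""
    subprocess.run(kaggle_download_command(name, competition), check=True)
```

For example, run_download("baranb/fut-22-ultimate-team-dataset") would issue the same command as the notebook cell above, without the “!” prefix.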

For competition datasets, the download command will be:

! kaggle competitions download -c nfl-health-and-safety-helmet-assignment

Once the data is downloaded, it can be unzipped by typing:

! unzip <name-of-the-file>

Voilà! From here, you’re good to go. A next step could be to walk through reading and manipulating JSON and CSV files, since these are the formats most used by data scientists.
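For readers who prefer to stay in Python, the unzip step can also be sketched with the standard library's zipfile module. The function name extract_archive and the default destination folder "data" are assumptions made for this example, not something the Kaggle tools require.

```python
import zipfile
from pathlib import Path


def extract_archive(zip_path, dest="data"):
    """Extract a zip archive into `dest` and return the sorted member names."""
    dest_dir = Path(dest)
    dest_dir.mkdir(parents=True, exist_ok=True)
    with zipfile.ZipFile(zip_path) as archive:
        archive.extractall(dest_dir)
        # Listing the members shows which files are now available to read.
        return sorted(archive.namelist())
```

Passing the name of the downloaded archive to extract_archive returns the list of extracted files, which is a quick way to see what the dataset contains.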

I hope this helps more than one reader.
